TL;DR:

CIOs face mounting pressure to adopt agentic AI, but skipping steps leads to cost overruns, compliance gaps, and complexity you can’t unwind. This post outlines a smarter, staged path to help you scale AI with control, clarity, and confidence.

AI leaders are under immense pressure to implement solutions that are both cost-effective and secure. The challenge lies not only in adopting AI but also in keeping pace with advancements that can feel overwhelming.

This often leads to the temptation to dive headfirst into the latest innovations to stay competitive.

However, jumping straight into complex multi-agent systems without a solid foundation is akin to constructing the upper floors of a building before laying its base, resulting in a structure that’s unstable and potentially hazardous.

In this post, we walk through how to guide your organization through each stage of agentic AI maturity: securely, efficiently, and without costly missteps.

Understanding key AI concepts

Before delving into the stages of AI maturity, it’s essential to establish a clear understanding of key concepts:

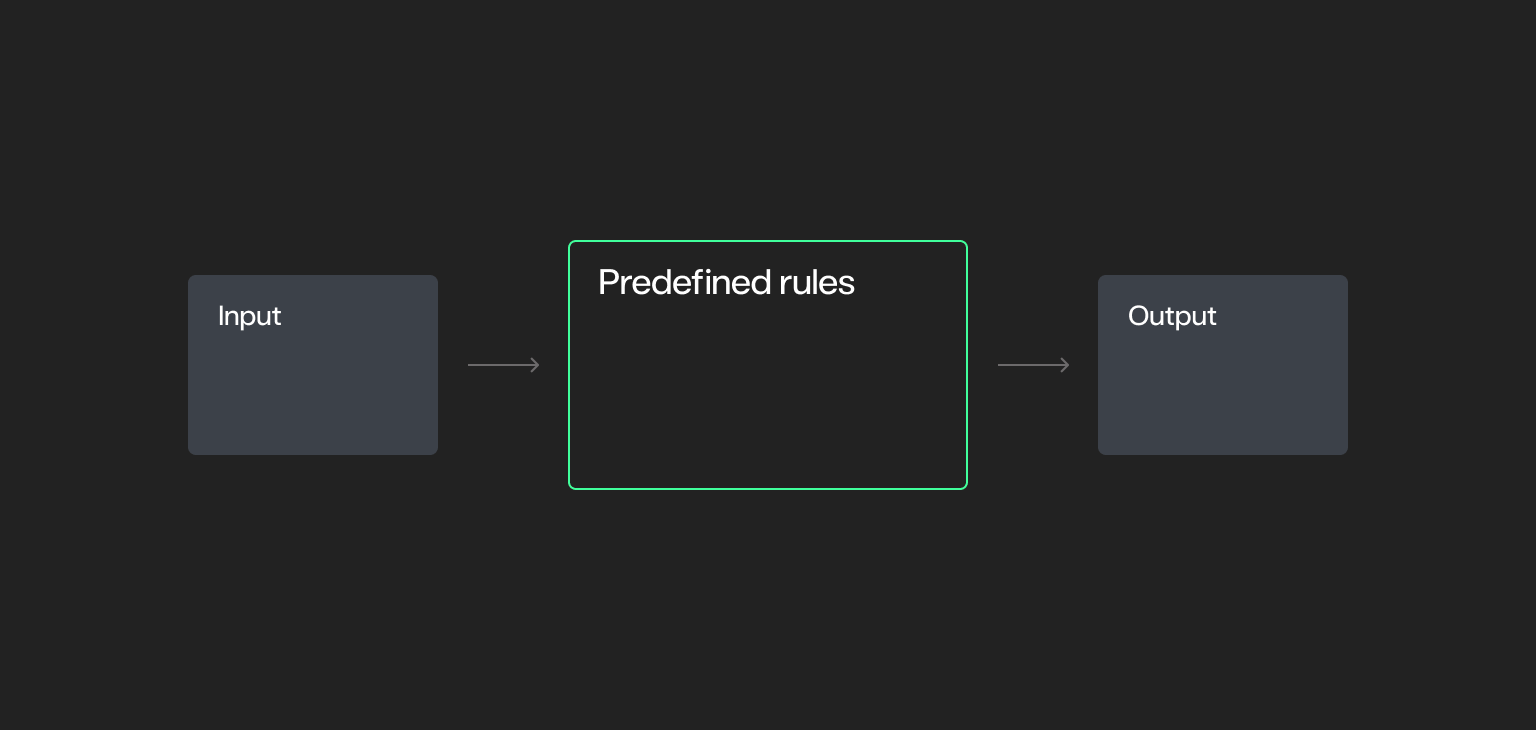

Deterministic systems

Deterministic systems are the foundational building blocks of automation.

- Follow a fixed set of predefined rules where the outcome is fully predictable. Given the same input, the system will always produce the same output.

- Do not incorporate randomness or ambiguity.

- While all deterministic systems are rule-based, not all rule-based systems are deterministic.

- Ideal for tasks requiring consistency, traceability, and control.

- Examples: Basic automation scripts, legacy enterprise software, and scheduled data transfer processes.

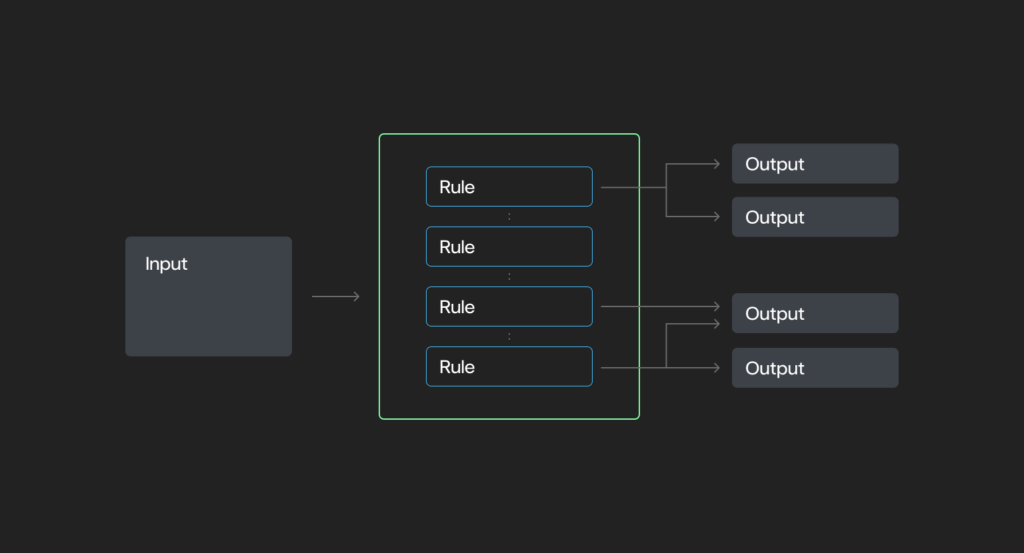

Rule-based systems

A broader category that includes deterministic systems but can also introduce variability (e.g., stochastic behavior).

- Operate based on a set of predefined conditions and actions: “if X, then Y.”

- May incorporate deterministic or stochastic elements, depending on design.

- Powerful for enforcing structure.

- Lack autonomy or reasoning capabilities.

- Examples: Email filters, Robotic Process Automation (RPA), and complex infrastructure protocols like internet routing.
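To make the distinction between deterministic and merely rule-based behavior concrete, here is a minimal Python sketch. The function names, the folder names, and the 10% audit rate are illustrative assumptions, not taken from any specific product.

```python
import random

def route_email(subject: str) -> str:
    """Deterministic rule: the same subject always yields the same folder."""
    if "invoice" in subject.lower():
        return "finance"
    return "inbox"

def route_email_with_sampling(
    subject: str, rng: random.Random, audit_rate: float = 0.1
) -> str:
    """Rule-based but stochastic: a random fraction of messages goes to audit."""
    if rng.random() < audit_rate:
        return "audit"
    return route_email(subject)

# The deterministic rule gives the same answer on every call.
print(route_email("Invoice #42"))
# The stochastic rule may differ between calls unless the generator is seeded.
print(route_email_with_sampling("Invoice #42", random.Random()))
```

Both functions are "if X, then Y" rules, but only the first guarantees the same output for the same input.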

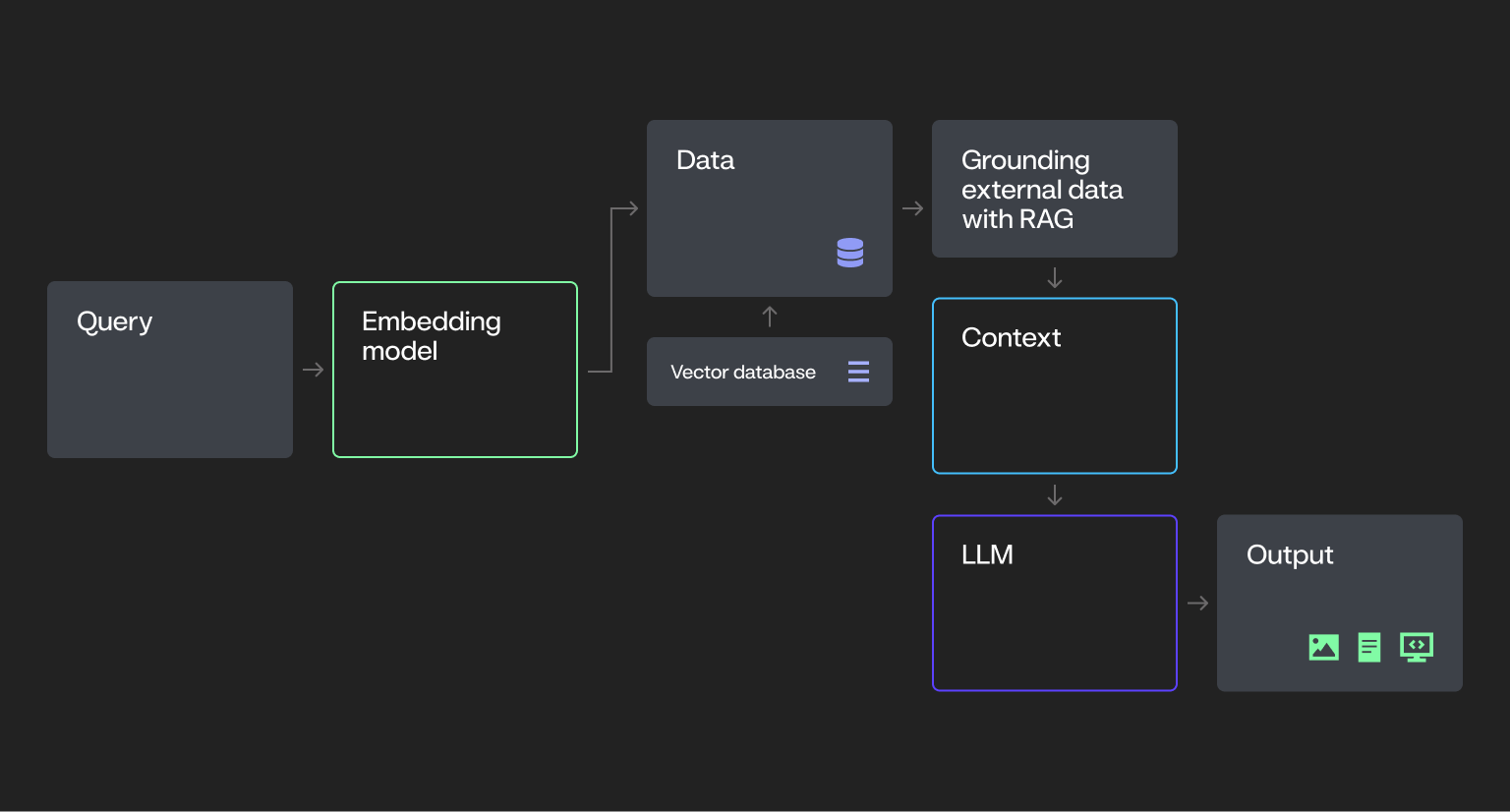

Process AI

A step beyond rule-based systems.

- Powered by Large Language Models (LLMs) and Vision-Language Models (VLMs).

- Trained on extensive datasets to generate diverse content (e.g., text, images, code) in response to input prompts.

- Responses are grounded in pre-trained knowledge and can be enriched with external data via techniques like Retrieval-Augmented Generation (RAG).

- Does not make autonomous decisions; operates only when prompted.

- Examples: Generative AI chatbots, summarization tools, and content-generation applications powered by LLMs.
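The retrieval-augmented pattern mentioned above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: word overlap stands in for vector similarity search, the documents are made up, and no model is actually called. The sketch stops at building the prompt that would be sent to an LLM.

```python
def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by shared words with the query (a stand-in for vector search)."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(doc.lower().split())), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:top_k] if score > 0]

def build_prompt(query: str, context: list[str]) -> str:
    """Assemble the augmented prompt that would be passed to a language model."""
    context_block = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}"

# Hypothetical internal documents.
docs = [
    "Warehouse A holds 120 pallets of widgets",
    "Vendor B ships every Tuesday",
    "The cafeteria opens at noon",
]
question = "How many pallets of widgets are in warehouse A"
prompt = build_prompt(question, retrieve(question, docs))
print(prompt)
```

Nothing here acts on its own: the system responds to a prompt and returns text for a person to read.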

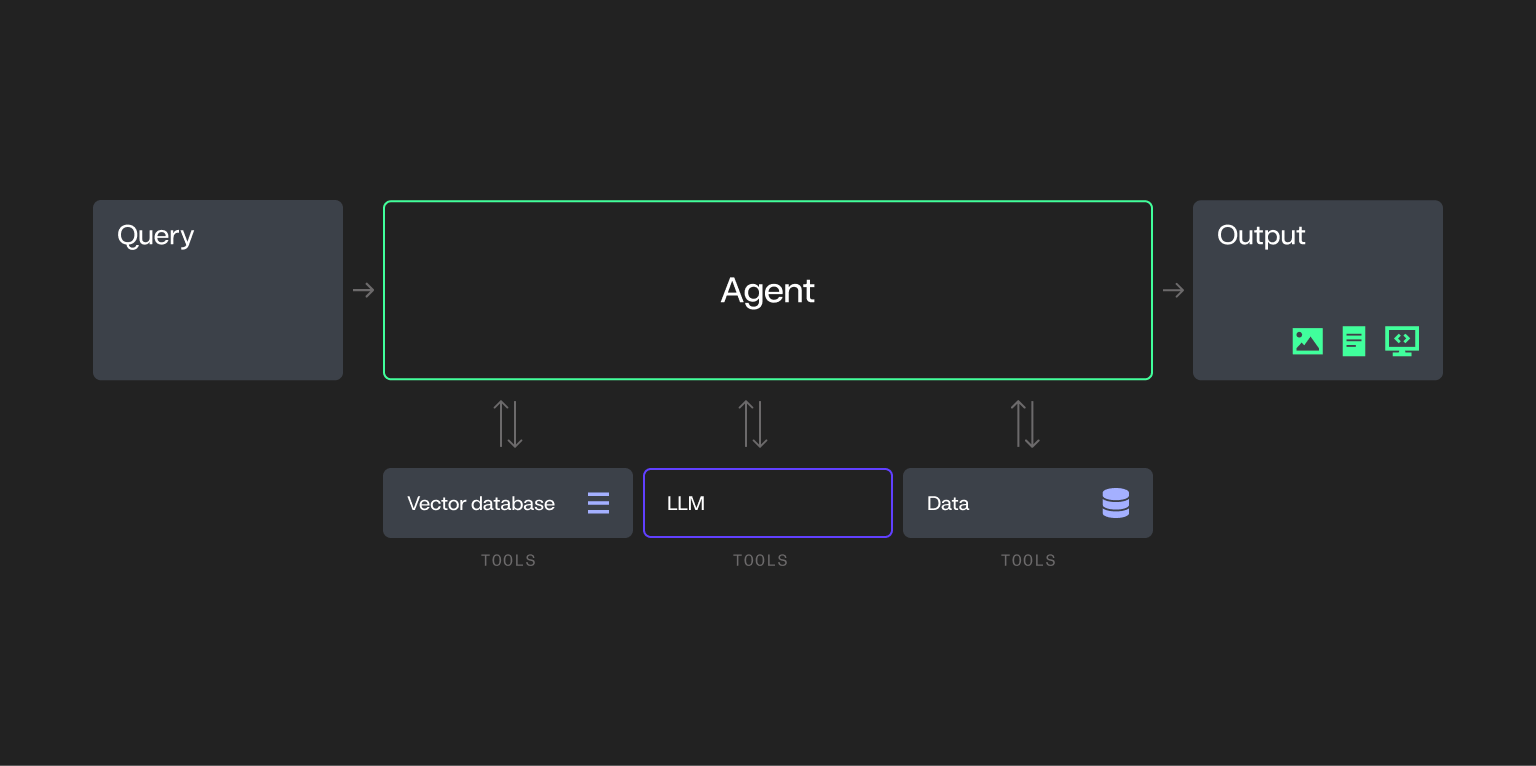

Single-agent systems

Introduce autonomy, planning, and tool usage, elevating foundational AI into more complex territory.

- AI-driven programs designed to perform specific tasks independently.

- Can integrate with external tools and systems (e.g., databases or APIs) to complete tasks.

- Do not collaborate with other agents; operate alone within a task framework.

- Not to be confused with RPA: RPA is ideal for highly standardized, rules-based tasks where logic doesn’t require reasoning or adaptation.

- Examples: AI-driven assistants for forecasting, monitoring, or automated task execution that operate independently.

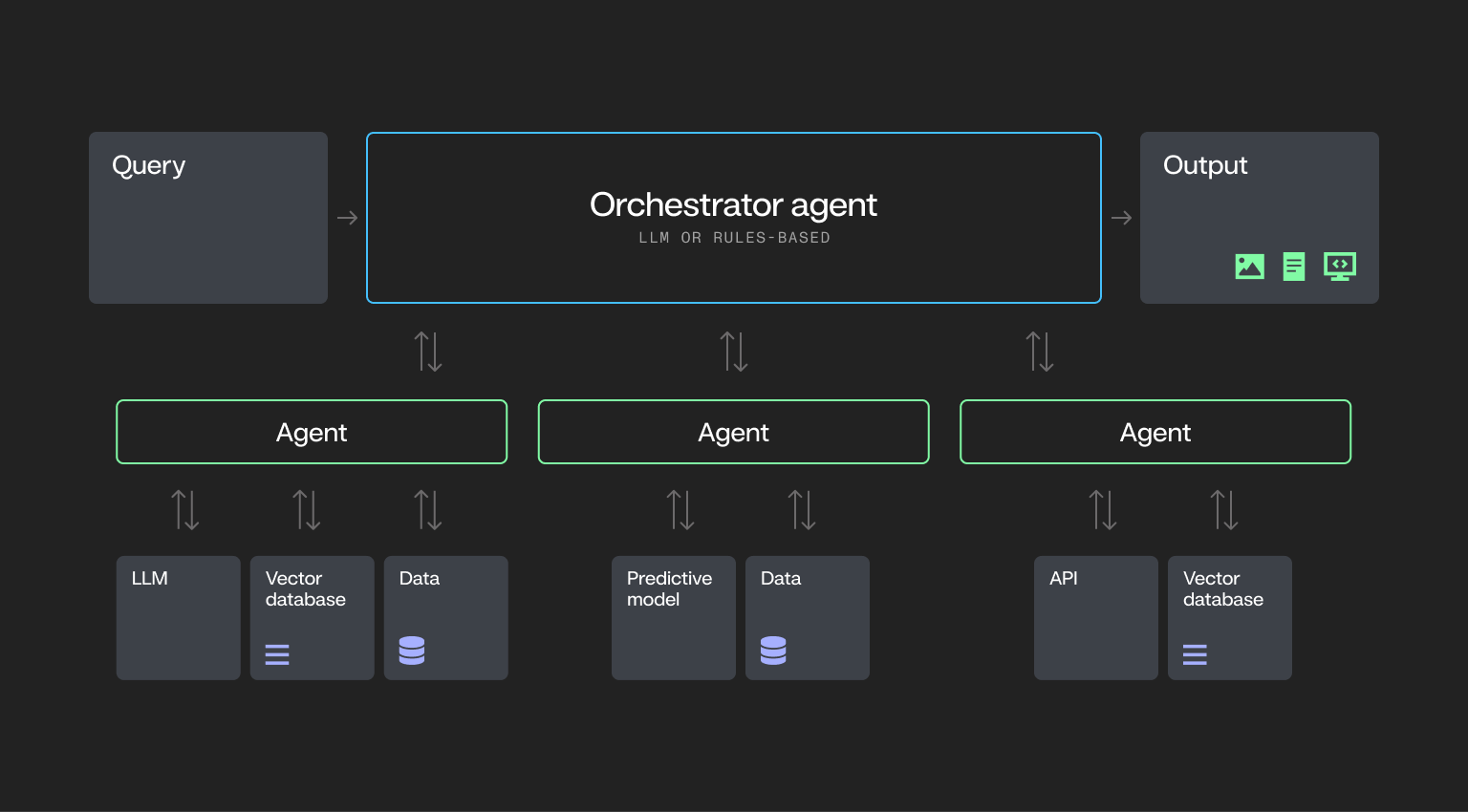

Multi-agent systems

The most advanced stage, featuring distributed decision-making, autonomous coordination, and dynamic workflows.

- Composed of multiple AI agents that interact and collaborate to achieve complex objectives.

- Agents dynamically decide which tools to use, when, and in what sequence.

- Capabilities include planning, reflection, memory utilization, and cross-agent collaboration.

- Examples: Distributed AI systems coordinating across departments like supply chain, customer service, or fraud detection.

What makes an AI system truly agentic?

To be considered truly agentic, an AI system typically demonstrates core capabilities that enable it to operate with autonomy and adaptability:

- Planning. The system can break down a task into steps and create a plan of execution.

- Tool calling. The AI selects and uses tools (e.g., models, functions) and initiates API calls to interact with external systems to complete tasks.

- Adaptability. The system can adjust its actions in response to changing inputs or environments, ensuring effective performance across varying contexts.

- Memory. The system retains relevant information across steps or sessions.

These characteristics align with widely accepted definitions of agentic AI, including frameworks discussed by AI leaders such as Andrew Ng.

With these definitions in mind, let’s explore the stages required to progress toward implementing multi-agent systems.

Understanding agentic AI maturity stages

For simplicity, we’ve delineated the path to more complex agentic flows into three stages. Each stage presents unique challenges and opportunities concerning cost, security, and governance.
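The four capabilities above can be seen together in a deliberately small Python sketch. Everything in it is an assumption for illustration: the tool names, the stock figures, and the reorder threshold are invented, and the plan is hard-coded where a real agent would typically have a model generate it.

```python
from typing import Callable

# Hypothetical tools; a real agent would wrap APIs or databases here.
def check_stock(sku: str) -> int:
    return {"widget": 4, "gadget": 50}.get(sku, 0)

def order_stock(sku: str) -> str:
    return f"ordered {sku}"

TOOLS: dict[str, Callable[[str], object]] = {
    "check_stock": check_stock,
    "order_stock": order_stock,
}

def run_agent(sku: str, reorder_below: int = 10) -> list[str]:
    memory: list[str] = []          # Memory: results kept across steps
    plan = ["check_stock"]          # Planning: start from an initial plan
    while plan:
        step = plan.pop(0)
        result = TOOLS[step](sku)   # Tool calling: dispatch by tool name
        memory.append(f"{step} -> {result}")
        # Adaptability: extend the plan based on what was observed
        if step == "check_stock" and result < reorder_below:
            plan.append("order_stock")
    return memory

print(run_agent("widget"))
print(run_agent("gadget"))
```

The low-stock item triggers a second step, while the well-stocked one stops after the check, which is the plan adapting to its inputs.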

Stage 1: Process AI

What this stage looks like

In the Process AI stage, organizations typically pilot generative AI through isolated use cases like chatbots, document summarization, or internal Q&A. These efforts are often led by innovation teams or individual business units, with limited involvement from IT.

Deployments are built around a single LLM and operate outside core systems like ERP or CRM, making integration and oversight difficult.

Infrastructure is often pieced together, governance is informal, and security measures may be inconsistent.

Supply chain example for Process AI

In the Process AI stage, a supply chain team might use a generative AI-powered chatbot to summarize shipment data or answer basic vendor queries based on internal documents. This tool can pull in data through a RAG workflow to provide insights, but it does not take any action autonomously.

For example, the chatbot could summarize inventory levels, predict demand based on historical trends, and generate a report for the team to review. However, the team must then decide what action to take (e.g., place restock orders or adjust supply levels).

The system merely provides insights; it doesn’t make decisions or take actions.

Common obstacles

While early AI initiatives can show promise, they often create operational blind spots that stall progress, drive up costs, and increase risk if left unaddressed.

- Data integration and quality. Most organizations struggle to unify data across disconnected systems, limiting the reliability and relevance of generative AI output.

- Scalability challenges. Pilot projects often stall when teams lack the infrastructure, access, or strategy to move from proof of concept to production.

- Inadequate testing and stakeholder alignment. Generative outputs are frequently released without rigorous QA or business user acceptance, leading to trust and adoption issues.

- Change management friction. As generative AI reshapes roles and workflows, poor communication and planning can create organizational resistance.

- Lack of visibility and traceability. Without model tracking or auditability, it’s difficult to understand how decisions are made or pinpoint where errors occur.

- Bias and fairness risks. Generative models can reinforce or amplify bias in training data, creating reputational, ethical, or compliance risks.

- Ethical and accountability gaps. AI-generated content can blur ethical lines or be misused, raising questions around responsibility and control.

- Regulatory complexity. Evolving global and industry-specific regulations make it difficult to ensure ongoing compliance at scale.

Tool and infrastructure requirements

Before advancing to more autonomous systems, organizations must ensure their infrastructure is equipped to support secure, scalable, and cost-effective AI deployment.

- Fast, flexible vector database updates to manage embeddings as new data becomes available.

- Scalable data storage to support large datasets used for training, enrichment, and experimentation.

- Sufficient compute resources (CPUs/GPUs) to power training, tuning, and running models at scale.

- Security frameworks with enterprise-grade access controls, encryption, and monitoring to protect sensitive data.

- Multi-model flexibility to test and evaluate different LLMs and determine the best fit for specific use cases.

- Benchmarking tools to visualize and compare model performance across assessments and testing.

- Realistic, domain-specific data to test responses, simulate edge cases, and validate outputs.

- A QA prototyping environment that supports quick setup, user acceptance testing, and iterative feedback.

- Embedded security, AI, and business logic for consistency, guardrails, and alignment with organizational standards.

- Real-time intervention and moderation tools that let IT and security teams monitor and control AI outputs as they are produced.

- Robust data integration capabilities to connect sources across the organization and ensure high-quality inputs.

- Elastic infrastructure to scale with demand without compromising performance or availability.

- Compliance and audit tooling that enables documentation, change tracking, and regulatory adherence.

Preparing for the next stage

To build on early generative AI efforts and prepare for more autonomous systems, organizations must lay a solid operational and organizational foundation.

- Invest in AI-ready data. It doesn’t need to be perfect, but it must be accessible, structured, and secure to support future workflows.

- Use vector database visualizations. This helps teams identify knowledge gaps and validate the relevance of generative responses.

- Apply business-driven QA/UAT. Prioritize acceptance testing with the end users who will rely on generative output, not just technical teams.

- Stand up a secure AI registry. Track model versions, prompts, outputs, and usage across the organization to enable traceability and auditing.

- Implement baseline governance. Establish foundational frameworks like role-based access control (RBAC), approval flows, and data lineage tracking.

- Create repeatable workflows. Standardize the AI development process to move beyond one-off experimentation and enable scalable output.

- Build traceability into generative AI usage. Ensure transparency around data sources, prompt construction, output quality, and user activity.

- Mitigate bias early. Use diverse, representative datasets and regularly audit model outputs to identify and address fairness risks.

- Gather structured feedback. Establish feedback loops with end users to catch quality issues, guide improvements, and refine use cases.

- Encourage cross-functional oversight. Involve legal, compliance, data science, and business stakeholders to guide strategy and ensure alignment.
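Two of the items above, a secure AI registry and role-based access control, fit together naturally. The Python sketch below shows one possible shape for them. The roles, permissions, class names, and sample entry are all hypothetical, and storage is an in-memory list where a production registry would use a durable, access-controlled store.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {"analyst": {"read"}, "ml_engineer": {"read", "write"}}

@dataclass
class RegistryEntry:
    model_version: str
    prompt: str
    output: str
    user: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

class AIRegistry:
    def __init__(self) -> None:
        self._entries: list[RegistryEntry] = []

    def log(self, role: str, entry: RegistryEntry) -> None:
        """Append an entry if the caller's role has write permission."""
        if "write" not in ROLE_PERMISSIONS.get(role, set()):
            raise PermissionError(f"role '{role}' may not write to the registry")
        self._entries.append(entry)

    def history(self, role: str, model_version: str) -> list[RegistryEntry]:
        """Return all logged entries for one model version, if the role may read."""
        if "read" not in ROLE_PERMISSIONS.get(role, set()):
            raise PermissionError(f"role '{role}' may not read the registry")
        return [e for e in self._entries if e.model_version == model_version]

registry = AIRegistry()
registry.log(
    "ml_engineer",
    RegistryEntry("summarizer-v1", "Summarize shipments", "3 shipments delayed", "alice"),
)
print(len(registry.history("analyst", "summarizer-v1")))
```

Recording the model version, prompt, output, and user on every call is what later makes it possible to trace how a given answer was produced.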

Key takeaways

Process AI is where most organizations begin, but it’s also where many get stuck. Without strong data foundations, clear governance, and scalable workflows, early experiments can introduce more risk than value.

To move forward, CIOs need to shift from exploratory use cases to enterprise-ready systems, with the infrastructure, oversight, and cross-functional alignment required to support safe, secure, and cost-effective AI adoption at scale.

Stage 2: Single-agent systems

What this stage looks like

At this stage, organizations begin tapping into true agentic AI, deploying single-agent systems that can act independently to complete tasks. These agents are capable of planning, reasoning, and calling tools like APIs or databases to get work done without human involvement.

Unlike earlier generative systems that wait for prompts, single-agent systems can decide when and how to act within a defined scope.

This marks a clear step into autonomous operations and a critical inflection point in an organization’s AI maturity.

Supply chain example for single-agent systems

Let’s revisit the supply chain example. With a single-agent system in place, the team can now autonomously manage inventory. The system monitors real-time stock levels across regional warehouses, forecasts demand using historical trends, and places restock orders automatically via an integrated procurement API, without human input.

Unlike the Process AI stage, where a chatbot only summarizes data or answers queries based on prompts, the single-agent system acts autonomously. It makes decisions, adjusts inventory, and places orders within a predefined workflow.

However, because the agent is making independent decisions, any errors in configuration or missed edge cases (e.g., unexpected demand spikes) could result in issues like stockouts, overordering, or unnecessary costs.

This is a critical shift. It’s not just about providing information anymore; it’s about the system making decisions and executing actions, making governance, monitoring, and guardrails more critical than ever.
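One simple form a guardrail can take is a cap on what the agent may do without a person signing off. The Python sketch below illustrates the idea for the restocking scenario. The `Decision` type, the `decide_restock` function, and the 500-unit cap are assumptions made for this example, not a description of any particular platform.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    action: str        # "none", "order", or "escalate"
    quantity: int = 0

def decide_restock(stock: int, forecast_demand: int, max_auto_order: int = 500) -> Decision:
    """Order the shortfall automatically, but escalate unusually large orders."""
    shortfall = forecast_demand - stock
    if shortfall <= 0:
        return Decision("none")
    if shortfall > max_auto_order:
        # Guardrail: a spike beyond the cap is routed to a person, not executed.
        return Decision("escalate", shortfall)
    return Decision("order", shortfall)

print(decide_restock(stock=100, forecast_demand=300))
print(decide_restock(stock=100, forecast_demand=5000))
```

The second call models a sudden demand spike: the agent still computes the shortfall, but the order is held for human review.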

Common obstacles

As single-agent systems unlock more advanced automation, many organizations run into practical roadblocks that make scaling difficult.

- Legacy integration challenges. Many single-agent systems struggle to connect with outdated architectures and data formats, making integration technically complex and resource-intensive.

- Latency and performance issues. As agents perform more complex tasks, delays in processing or tool calls can degrade user experience and system reliability.

- Evolving compliance requirements. Emerging regulations and ethical standards introduce uncertainty. Without robust governance frameworks, staying compliant becomes a moving target.

- Compute and talent demands. Running agentic systems requires significant infrastructure and specialized skills, putting pressure on budgets and headcount planning.

- Tool fragmentation and vendor lock-in. The nascent agentic AI landscape makes it hard to choose the right tooling. Committing to a single vendor too early can limit flexibility and drive up long-term costs.

- Traceability and tool call visibility. Many organizations lack the observability and granular intervention these systems require. Without detailed traceability and the ability to intervene at a granular level, systems can easily run amok, leading to unpredictable outcomes and increased risk.

Tool and infrastructure requirements

At this stage, your infrastructure needs to do more than just support experimentation. It needs to keep agents connected, running smoothly, and operating securely at scale.

- Integration platform with tools that facilitate seamless connectivity between the AI agent and your core business systems, ensuring smooth data flow across environments.

- Monitoring systems designed to track and analyze the agent’s performance and outcomes, flag issues, and surface insights for ongoing improvement.

- Compliance management tools that help enforce AI policies and adapt quickly to evolving regulatory requirements.

- Scalable, reliable storage to handle the growing volume of data generated and exchanged by AI agents.

- Consistent compute access to keep agents performing efficiently under fluctuating workloads.

- Layered security controls that protect data, manage access, and maintain trust as agents operate across systems.

- Dynamic intervention and moderation that can detect when processes aren’t adhering to policies, intervene in real time, and send alerts for human intervention.

Preparing for the next stage

Before layering on additional agents, organizations need to take stock of what’s working, where the gaps are, and how to strengthen coordination, visibility, and control at scale.

- Evaluate current agents. Identify performance limitations, system dependencies, and opportunities to improve or expand automation.

- Build coordination frameworks. Establish systems that can support seamless interaction and task-sharing between future agents.

- Strengthen observability. Implement monitoring tools that provide real-time insights into agent behavior, outputs, and failures at both the tool level and the agent level.

- Engage cross-functional teams. Align AI goals and risk management strategies across IT, legal, compliance, and business units.

- Embed automated policy enforcement. Build in mechanisms that uphold security standards and support regulatory compliance as agent systems expand.
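Tool-level observability, as called for above, can start with something as small as wrapping each tool so every call leaves a record. The following Python sketch shows one way to do that with a decorator. The `traced` decorator, the `TRACE` list, and the `place_order` tool are hypothetical names. A real deployment would send these records to a logging or tracing backend instead of a module-level list.

```python
import functools
import time

TRACE: list[dict] = []   # Stand-in for a logging or tracing backend.

def traced(tool):
    """Record every call to a tool: name, arguments, outcome, and duration."""
    @functools.wraps(tool)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        record = {"tool": tool.__name__, "args": args, "kwargs": kwargs}
        try:
            result = tool(*args, **kwargs)
            record["status"] = "ok"
            return result
        except Exception as exc:
            record["status"] = "error"
            record["error"] = repr(exc)
            raise
        finally:
            # Runs on both success and failure, so no call goes unrecorded.
            record["seconds"] = time.perf_counter() - start
            TRACE.append(record)
    return wrapper

@traced
def place_order(sku: str, quantity: int) -> str:
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return f"ordered {quantity} x {sku}"
```

After a few calls to `place_order`, `TRACE` holds one record per call, including failed ones, which is the raw material for the agent-level and tool-level monitoring described above.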

Key takeaways

Single-agent systems offer significant capability by enabling autonomous actions that improve operational efficiency. However, they often come with higher costs compared to non-agentic RAG workflows, like those in the Process AI stage, as well as increased latency and variability in response times.

Since these agents make decisions and take actions on their own, they require tight integration, careful governance, and full traceability.

If foundational controls like observability, governance, security, and auditability aren’t firmly established in the Process AI stage, these gaps will only widen, exposing the organization to greater risks around cost, compliance, and brand reputation.

Stage 3: Multi-agent systems

What this stage looks like

In this stage, multiple AI agents work together, each with its own task, tools, and logic, to achieve shared goals with minimal human involvement. These agents operate autonomously, but they also coordinate, share information, and adjust their actions based on what others are doing.

Unlike single-agent systems, decisions aren’t made in isolation. Each agent acts based on its own observations and context, contributing to a system that behaves more like a team: planning, delegating, and adapting in real time.

This kind of distributed intelligence unlocks powerful use cases and massive scale. But it also introduces significant operational complexity: overlapping decisions, system interdependencies, and the potential for cascading failures if agents fall out of sync.

Getting this right demands strong architecture, real-time observability, and tight controls.

Supply chain example for multi-agent systems

In earlier stages, a chatbot was used to summarize shipments and a single-agent system was deployed to automate inventory restocking.

In this example, a network of AI agents is deployed, each specializing in a different part of the operation, from forecasting and video analysis to scheduling and logistics.

When an unexpected shipment volume is forecasted, agents kick into action:

- A forecasting agent projects capacity needs.

- A computer vision agent analyzes live warehouse footage to find underutilized space.

- A delay prediction agent taps time series data to anticipate late arrivals.

These agents communicate and coordinate in real time, adjusting workflows, updating the warehouse manager, and even triggering downstream changes like rescheduling vendor pickups.

This level of autonomy unlocks speed and scale that manual processes can’t match. But it also means one faulty agent, or a breakdown in communication, can ripple across the system.

At this stage, visibility, traceability, intervention, and guardrails become non-negotiable.
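To show how coordination and traceability can be built in from the start, here is a small Python sketch of agents sharing state through a common "blackboard" that logs every write. It is a simplification under stated assumptions: the agents run in a fixed sequence instead of concurrently, the vision agent receives its observation as an input instead of analyzing video, and all names are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Blackboard:
    """Shared state that agents read and write, with an audit trail."""
    facts: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def post(self, agent: str, key: str, value) -> None:
        self.facts[key] = value
        self.log.append((agent, key, value))   # every write is attributable

def forecasting_agent(board: Blackboard) -> None:
    board.post("forecast", "pallets_needed", board.facts["incoming_pallets"])

def vision_agent(board: Blackboard) -> None:
    # Stand-in for video analysis: free space is supplied as an input here.
    board.post("vision", "pallets_free", board.facts["observed_free_slots"])

def scheduling_agent(board: Blackboard) -> None:
    shortfall = board.facts["pallets_needed"] - board.facts["pallets_free"]
    action = "reschedule_pickups" if shortfall > 0 else "proceed"
    board.post("scheduler", "action", action)

def run(incoming: int, free_slots: int) -> Blackboard:
    board = Blackboard({"incoming_pallets": incoming, "observed_free_slots": free_slots})
    for agent in (forecasting_agent, vision_agent, scheduling_agent):
        agent(board)
    return board

result = run(incoming=120, free_slots=80)
print(result.facts["action"])
print(result.log)
```

Because each write records which agent made it, a faulty value can be traced back to its source, which is the kind of visibility this stage depends on.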

Common obstacles

The shift to multi-agent systems isn’t just a step up in capability; it’s a leap in complexity. Each new agent added to the system introduces new variables, new interdependencies, and new ways for things to break if your foundations aren’t solid.

- Escalating infrastructure and operational costs. Running multi-agent systems is expensive, especially as each agent drives additional API calls, orchestration layers, and real-time compute demands. Costs compound quickly across multiple fronts:

  - Specialized tooling and licenses. Building and managing agentic workflows often requires niche tools or frameworks, increasing costs and limiting flexibility.

  - Resource-intensive compute. Multi-agent systems demand high-performance hardware, like GPUs, which can be costly to scale and difficult to manage efficiently.

  - Scaling the team. Multi-agent systems require niche expertise across AI, MLOps, and infrastructure, often adding headcount and increasing payroll costs in an already competitive talent market.

- Operational overhead. Even autonomous systems need hands-on support. Standing up and maintaining multi-agent workflows often requires significant manual effort from IT and infrastructure teams, especially during deployment, integration, and ongoing monitoring.

- Deployment sprawl. Managing agents across cloud, edge, desktop, and mobile environments introduces significantly more complexity than predictive AI, which typically relies on a single endpoint. In comparison, multi-agent systems often require 5x the coordination, infrastructure, and support to deploy and maintain.

- Misaligned agents. Without strong coordination, agents can take conflicting actions, duplicate work, or pursue goals out of sync with business priorities.

- Security surface expansion. Each additional agent introduces a new potential vulnerability, making it harder to protect systems and data end-to-end.

- Vendor and tooling lock-in. Emerging ecosystems can lead to heavy dependence on a single provider, making future changes costly and disruptive.

- Cloud constraints. When multi-agent workloads are tied to a single provider, organizations risk running into compute throttling, burst limits, or regional capacity issues, especially as demand becomes less predictable and harder to control.

- Autonomy without oversight. Agents may exploit loopholes or behave unpredictably if not tightly governed, creating risks that are hard to contain in real time.

- Dynamic resource allocation. Multi-agent workflows often require infrastructure that can reallocate compute (e.g., GPUs, CPUs) in real time, adding new layers of complexity and cost to resource management.

- Model orchestration complexity. Coordinating agents that rely on diverse models or reasoning strategies introduces integration overhead and increases the risk of failure across workflows.

- Fragmented observability. Tracing decisions, debugging failures, or identifying bottlenecks becomes exponentially harder as agent count and autonomy grow.

- No clear “done.” Without strong task verification and output validation, agents can drift off-course, fail silently, or burn unnecessary compute.
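The last obstacle, the lack of a clear "done," has a simple mitigation: pair every agent loop with an explicit completion check and a step budget. The Python sketch below is a generic illustration. The function name, the integer state, and the default budget of 10 steps are assumptions chosen to keep the example small.

```python
from typing import Callable

def run_until_done(
    step: Callable[[int], int],
    is_done: Callable[[int], bool],
    state: int,
    max_steps: int = 10,
) -> tuple[int, str]:
    """Repeat an agent step until completion is verified or the budget runs out."""
    for _ in range(max_steps):
        if is_done(state):
            return state, "done"
        state = step(state)
    # Budget spent: report the outcome explicitly so failures are not silent.
    return state, ("done" if is_done(state) else "budget_exhausted")

# An agent that makes progress finishes with a verified result.
print(run_until_done(lambda s: s + 1, lambda s: s >= 3, 0))
# An agent that makes no progress is stopped and flagged.
print(run_until_done(lambda s: s, lambda s: s >= 3, 0, max_steps=5))
```

The explicit `budget_exhausted` status turns a silent failure into a signal that monitoring or a human reviewer can act on, and the step cap bounds wasted compute.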

Tool and infrastructure requirements

Once agents start making decisions and coordinating with each other, your systems need to do more than just keep up; they need to stay in control. These are the core capabilities to have in place before scaling multi-agent workflows in production.

- Elastic compute resources. Scalable access to GPUs, CPUs, and high-performance infrastructure that can be dynamically reallocated to support intensive agentic workloads in real time.

- Multi-LLM access and routing. Flexibility to test, compare, and route tasks across different LLMs to control costs and optimize performance by use case.

- Autonomous system safeguards. Built-in security frameworks that prevent misuse, protect data integrity, and enforce compliance across distributed agent actions.

- Agent orchestration layer. Workflow orchestration tools that coordinate task delegation, tool usage, and communication between agents at scale.

- Interoperable platform architecture. Open systems that support integration with diverse tools and technologies, helping you avoid lock-in and enabling long-term flexibility.

- End-to-end dynamic observability and intervention. Monitoring, moderation, and traceability tools that not only surface agent behavior, detect anomalies, and support real-time intervention, but also adapt as agents evolve. These tools can identify when agents attempt to exploit loopholes or create new ones, triggering alerts or halting processes to re-engage human oversight.
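As one illustration of multi-LLM routing, the Python sketch below picks the cheapest model that clears a task's quality bar. The model names, prices, and quality scores are invented for the example. In practice the scores would come from your own benchmarking, and the routing policy might also weigh latency or data-residency constraints.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float   # illustrative numbers, not real pricing
    quality: int                # hypothetical 1-5 score from internal benchmarks

def route(task_min_quality: int, models: list[ModelOption]) -> ModelOption:
    """Pick the cheapest model that meets the task's minimum quality bar."""
    eligible = [m for m in models if m.quality >= task_min_quality]
    if not eligible:
        raise ValueError("no model meets the required quality")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)

MODELS = [
    ModelOption("small-model", 0.1, 2),
    ModelOption("medium-model", 0.5, 3),
    ModelOption("large-model", 2.0, 5),
]

print(route(1, MODELS).name)   # routine task
print(route(4, MODELS).name)   # demanding task
```

Keeping the routing rule separate from the model list means a new model can be added, or an old one retired, without touching the agents that call it.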

Preparing for the next stage

There’s no playbook for what comes after multi-agent systems, but organizations that prepare now will be the ones shaping what comes next. Building a flexible, resilient foundation is the best way to stay ahead of fast-moving capabilities, shifting regulations, and evolving risks.

- Enable dynamic resource allocation. Infrastructure should support real-time reallocation of GPUs, CPUs, and compute capacity as agent workflows evolve.

- Implement granular observability. Use advanced monitoring and alerting tools to detect anomalies and trace agent behavior at the most detailed level.

- Prioritize interoperability and flexibility. Choose tools and platforms that integrate easily with other systems and support hot-swapping components and streamlined CI/CD workflows so you’re not locked into one vendor or tech stack.

- Build multi-cloud fluency. Ensure your teams can work across cloud platforms to distribute workloads efficiently, reduce bottlenecks, avoid provider-specific limitations, and support long-term flexibility.

- Centralize AI asset management. Use a unified registry to govern access, deployment, and versioning of all AI tools and agents.

- Evolve security with your agents. Implement adaptive, context-aware security protocols that respond to emerging threats in real time.

- Prioritize traceability. Ensure all agent decisions are logged, explainable, and auditable to support investigation and continuous improvement.

- Stay current with tools and techniques. Build systems and workflows that can continuously test and integrate new models, prompts, and data sources.

Key takeaways

Multi-agent systems promise scale, but without the right foundation, they’ll amplify your problems, not solve them.

As agents multiply and decisions become more distributed, even small gaps in governance, integration, or security can cascade into costly failures.

AI leaders who succeed at this stage won’t be the ones chasing the flashiest demos. They’ll be the ones who planned for complexity before it arrived.

Advancing to agentic AI without losing control

AI maturity doesn’t happen all at once. Each stage, from early experiments to multi-agent systems, brings new value, but also new complexity. The key isn’t to rush forward. It’s to move with intention, building on strong foundations at every step.

For AI leaders, this means scaling AI in ways that are cost-effective, well-governed, and resilient to change.

You don’t have to do everything right now, but the decisions you make now shape how far you’ll go.

Want to evolve through your AI maturity safely and efficiently? Request a demo to see how our Agentic AI Apps Platform ensures secure, cost-effective growth at each stage.

About the authors

Lisa Aguilar is VP of Product Marketing and Field CTOs at DataRobot, where she is responsible for building and executing the go-to-market strategy for their AI-driven forecasting product line. As part of her role, she partners closely with the product management and development teams to identify key solutions that can address the needs of retailers, manufacturers, and financial service providers with AI. Prior to DataRobot, Lisa was at ThoughtSpot, the leader in Search and AI-Driven Analytics.

Dr. Ramyanshu (Romi) Datta is the Vice President of Product for AI Platform at DataRobot, responsible for capabilities that enable orchestration and lifecycle management of AI Agents and Applications. Previously he was at AWS, leading product management for AWS’s AI Platforms: Amazon Bedrock Core Systems and Generative AI on Amazon SageMaker. He was also GM for AWS’s Human-in-the-Loop AI services. Prior to AWS, Dr. Datta held engineering and product roles at IBM and Nvidia. He received his M.S. and Ph.D. degrees in Computer Engineering from the University of Texas at Austin, and his MBA from the University of Chicago Booth School of Business. He is a co-inventor of 25+ patents on subjects ranging from Artificial Intelligence, Cloud Computing & Storage to High-Performance Semiconductor Design and Testing.

Dr. Debadeepta Dey is a Distinguished Researcher at DataRobot, where he leads dual-purpose strategic research initiatives. These initiatives focus on advancing the fundamental state-of-the-art in Deep Learning and Generative AI, while also solving pervasive problems faced by DataRobot’s customers, with the goal of enabling them to derive value from AI. He completed his PhD in AI and Robotics from The Robotics Institute, Carnegie Mellon University in 2015. From 2015 to 2024, he was a researcher at Microsoft Research. His primary research interests include Reinforcement Learning, AutoML, Neural Architecture Search, and high-dimensional planning. He regularly serves as Area Chair at ICML, NeurIPS, and ICLR, and has published over 30 papers in top-tier AI and Robotics journals and conferences. His work has been recognized with a Best Paper of the Year Shortlist nomination at the International Journal of Robotics Research.